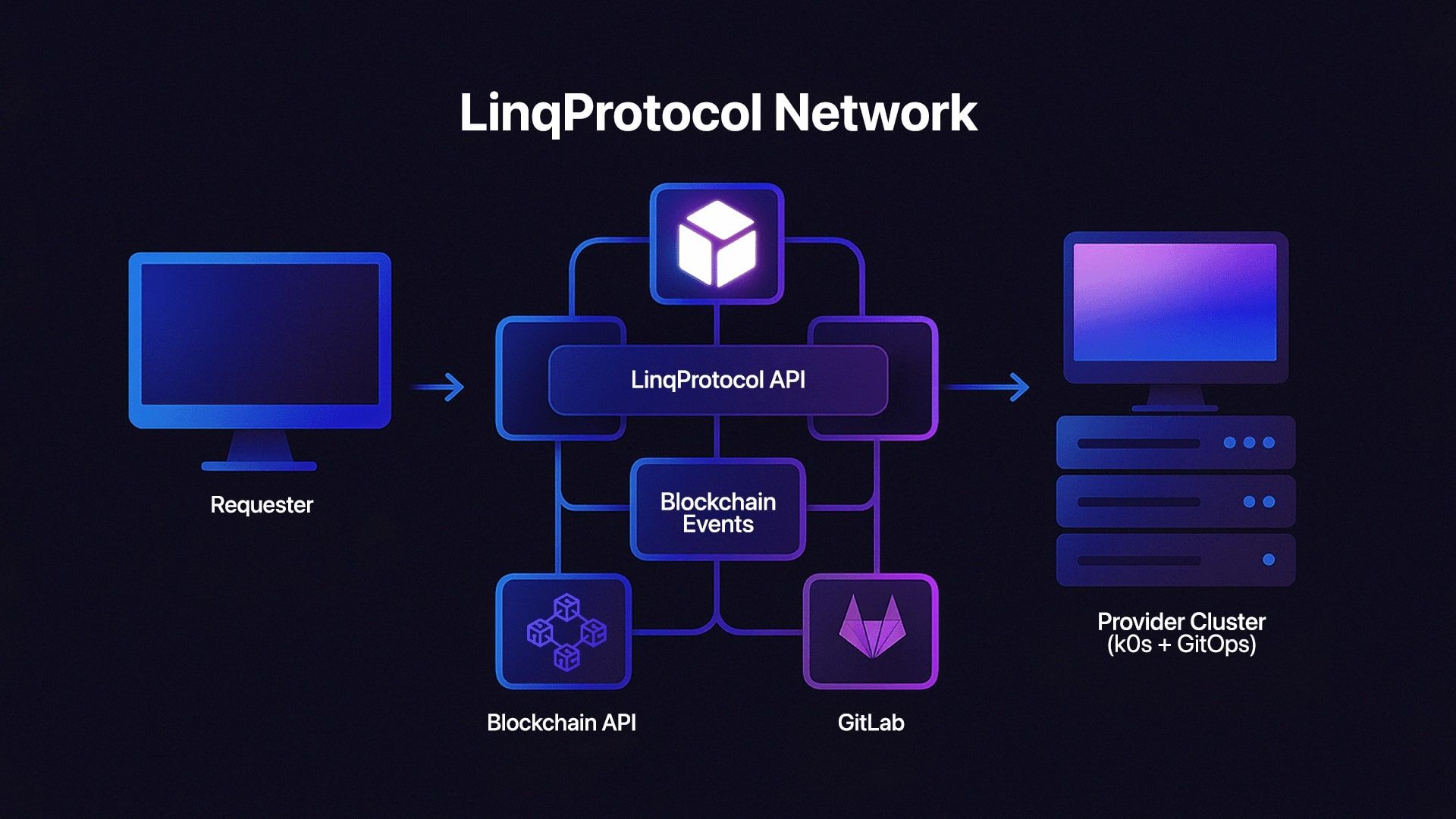

LinqProtocol turns raw, geographically scattered hardware into a single, on-demand super-cluster. In the demo you’re about to watch, one installer and one API call move a real application (Open WebUI in this case) from a requester’s laptop in one country to a provider’s cluster on another continent, with no VPN, no manual SSH, and no central cloud bill. Everything you see runs on open-source tooling and settles to an EVM-compatible blockchain for auditability.

Open source isn’t just a buzzword – it’s the backbone of modern computing. In fact, 97% of today’s applications incorporate open-source code and 90% of companies are using open-source software in some way. The collaborative development model has unlocked unprecedented innovation at an industry-wide scale. Millions of developers around the world choose to build in the open because it fosters trust, transparency, and rapid evolution. This is especially critical for a project like LinqProtocol, which aims to create an open, trustless compute network where transparency and community collaboration are paramount.

The scale of the open-source ecosystem is staggering. As of 2022, the Linux Foundation’s Cloud Native Computing Foundation (CNCF) alone hosted 157 projects with over 178,000 contributors worldwide. On GitHub, more than 150 million people collaborate on over 420 million projects – a testament to how global and ubiquitous open-source development has become. Major tech companies actively participate too; for example, Intel contributed about 12.6% of recent Linux kernel changes (nearly 97k lines of code), while Huawei contributed almost 9%. This broad base of contributors – from individual enthusiasts to corporate engineers – means that open-source projects benefit from diverse expertise and peer review. The result is software that’s more robust and secure, which is exactly what a trustless infrastructure requires.

Open source has quite literally built the internet. Linux, an open-source OS, runs on 96.3% of the top one million web servers, and every one of the world’s top 500 supercomputers runs Linux as well. The software that powers our daily lives – from web servers like Apache and Nginx, to programming languages and libraries, to container platforms like Docker and Kubernetes – thrives as open-source projects. Without this foundation, a platform like LinqProtocol simply couldn’t exist. We’re standing on the shoulders of giants, and we believe in giving back to that same community. By eventually developing LinqProtocol entirely in the open, we will ensure that anyone can inspect the code, contribute improvements, or spin up a node to join the network. This openness is not just philosophy; it’s a practical choice to earn the trust of developers and users. An open, trustless compute network must be verifiable and accessible to all – values that only an open-source approach can fully guarantee.

LinqProtocol’s production stack already does much of the heavy lifting seen in the demo video. Coordination starts on-chain: every job offer, bid, and settlement is governed by a set of EVM-compatible smart contracts that live on whichever network a given deployment requires, whether mainnet, sidechain, or rollup. Because the marketplace logic runs entirely in the open, anyone can audit how work is assigned and how funds flow; there is no hidden scheduler or payment server that could become a single point of failure or trust.
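To make the offer, bid, and settlement flow concrete, here is a minimal off-chain Python model of the lifecycle those contracts govern. It is a sketch under stated assumptions, not LinqProtocol’s contract code: the `Job` and `JobState` names, the integer token balances, and the rule of awarding the lowest bid are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum, auto


class JobState(Enum):
    OPEN = auto()      # offer posted, accepting bids
    AWARDED = auto()   # one bid accepted, work assigned
    SETTLED = auto()   # payment released to the provider


@dataclass
class Job:
    """Hypothetical model of one marketplace job; the real logic lives on-chain."""
    job_id: int
    requester: str
    max_price: int                                      # budget in the smallest token unit (assumed)
    state: JobState = JobState.OPEN
    bids: dict[str, int] = field(default_factory=dict)  # provider -> quoted price
    provider: str | None = None
    price: int | None = None

    def bid(self, provider: str, price: int) -> None:
        if self.state is not JobState.OPEN:
            raise ValueError("job is not accepting bids")
        if price > self.max_price:
            raise ValueError("bid exceeds the requester's budget")
        self.bids[provider] = price

    def award_lowest(self) -> str:
        # Assumed award rule: the cheapest bid wins; ties go to whichever provider bid first.
        if self.state is not JobState.OPEN or not self.bids:
            raise ValueError("nothing to award")
        self.provider, self.price = min(self.bids.items(), key=lambda kv: kv[1])
        self.state = JobState.AWARDED
        return self.provider

    def settle(self, balances: dict[str, int]) -> None:
        # Move the agreed price from requester to provider exactly once.
        if self.state is not JobState.AWARDED:
            raise ValueError("job must be awarded before settlement")
        balances[self.requester] -= self.price
        balances[self.provider] = balances.get(self.provider, 0) + self.price
        self.state = JobState.SETTLED
```

In a real deployment each of these transitions would be a contract call, and the balance transfer would presumably be handled by on-chain escrow rather than a Python dict.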

Out at the edge, each provider runs a lightweight LinqProtocol client/cluster. Once installed, it registers the node’s capabilities, listens for matching jobs, and, subject to the operator’s own policy, pulls the workload and stands it up automatically. Monitoring, error handling, and basic remediation are built in, so both an individual with a spare workstation and the operator of a hyperscale data centre can “plug in and walk away.” This self-governing agent model lets the network grow horizontally without a ballooning ops team.
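The accept-or-skip decision the client makes for each matching job can be pictured as a simple predicate over the node’s capabilities and the operator’s policy. This Python sketch is illustrative only: the field names, the registry allow-list, and the minimum-price rule are hypothetical examples of what an operator policy might contain.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Capabilities:
    """What the node registered when the client was installed (hypothetical fields)."""
    cpus: int
    memory_gb: int
    gpus: int


@dataclass(frozen=True)
class OperatorPolicy:
    """Limits the operator sets; the client never exceeds them."""
    min_price_per_hour: float            # ignore offers below this rate
    max_cpus: int                        # share at most this many cores
    allowed_registries: tuple[str, ...]  # only pull images hosted on these registries


@dataclass(frozen=True)
class JobRequest:
    image: str
    cpus: int
    memory_gb: int
    gpus: int
    price_per_hour: float


def should_accept(job: JobRequest, caps: Capabilities, policy: OperatorPolicy) -> bool:
    """Accept a job only if it fits the hardware and satisfies the operator's policy."""
    fits_hardware = (
        job.cpus <= caps.cpus
        and job.memory_gb <= caps.memory_gb
        and job.gpus <= caps.gpus
    )
    within_policy = (
        job.cpus <= policy.max_cpus
        and job.price_per_hour >= policy.min_price_per_hour
        and any(job.image.startswith(reg + "/") for reg in policy.allowed_registries)
    )
    return fits_hardware and within_policy
```

Keeping the policy as plain data makes it easy to audit and to change without touching the agent itself.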

Workloads themselves run inside OCI-compliant containers. When a task is awarded, the client/cluster fetches an image that already includes every library, binary, or model the job needs, then launches the container with strict resource limits. Containerization means a service behaves consistently whether the host runs Ubuntu, Fedora, or Windows and, just as importantly, keeps the workload isolated so the provider’s host stays clean and secure. Developers don’t have to learn anything new: if an application runs on Docker or Kubernetes today, it will run on LinqProtocol tomorrow.
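As a rough illustration of what “strict resource limits” can mean in practice, the sketch below assembles a `docker run` command using standard Docker CLI flags for CPU, memory, process-count, and privilege limits. It assumes the provider host uses the Docker CLI; LinqProtocol’s client may well use a different OCI runtime or Kubernetes, and the `build_run_command` helper and the default limit values are hypothetical.

```python
from __future__ import annotations

import shlex
import subprocess


def build_run_command(image: str, cpus: float, memory_mb: int, pids: int = 256) -> list[str]:
    """Assemble a `docker run` invocation with hard resource and privilege limits."""
    return [
        "docker", "run",
        "--rm",                                 # remove the container when it exits
        "--cpus", str(cpus),                    # CPU quota (fractional cores allowed)
        "--memory", f"{memory_mb}m",            # hard memory cap
        "--pids-limit", str(pids),              # bound the number of processes
        "--read-only",                          # mount the root filesystem read-only
        "--cap-drop", "ALL",                    # drop all Linux capabilities
        "--security-opt", "no-new-privileges",  # block privilege escalation via setuid
        image,
    ]


def launch(image: str, cpus: float, memory_mb: int) -> subprocess.CompletedProcess:
    """Run the container and raise CalledProcessError if docker exits non-zero."""
    cmd = build_run_command(image, cpus, memory_mb)
    print("running:", shlex.join(cmd))
    return subprocess.run(cmd, check=True)
```

`--read-only` can break images that write to their root filesystem; such workloads would need a writable `--tmpfs` or volume mount.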

LinqProtocol utilizes a selection of open-source tools chosen for their contributions to decentralization, autonomy, and low-friction scalability.

In sum, the tech choices in LinqProtocol – from blockchain coordination to containerization and networking – all serve our goals of decentralization, autonomy, and low-friction scalability. By building on open-source technologies and contributing back improvements, we will ensure that our compute network remains transparent and extensible. Anyone can inspect how it works, run their own nodes, or even propose changes, which is exactly how an open, trustless infrastructure should evolve.

LinqProtocol’s core services are up and running in development today, but the platform is still in its infancy relative to the scale we envision. The milestones below outline where we intend to take the network next. We describe them in functional terms; exact component choices may evolve as the open-source landscape and community needs change.

Observability & Trust Metrics

We plan to weave continuous telemetry into every layer of the system, using something like an “LGTM stack” (Loki for logs, Grafana for dashboards, Tempo for traces, and Mimir for metrics). Each node will eventually report uptime, resource utilization, logs, job success rates, and other signals into a shared metrics plane. Those data points will roll into a transparent scoring model so that the scheduler, and the community, can spot unreliable nodes early and reward consistent ones. Our goal is a self-healing fabric in which reputation is earned through demonstrable performance rather than central approval.
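One way such a transparent score could combine telemetry is sketched below. The observability stack would only collect the raw signals; the scoring itself is our assumption for illustration: a weighted blend of uptime and the lower bound of the Wilson score interval on job success rate, which keeps a node with a single lucky job from outranking one with a long track record. The weights and the `NodeStats` fields are hypothetical.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeStats:
    uptime: float    # fraction of the window the node passed health checks (0..1)
    jobs_ok: int     # jobs completed successfully in the window
    jobs_total: int  # jobs assigned in the same window


def wilson_lower_bound(successes: int, total: int, z: float = 1.96) -> float:
    """Lower bound of the Wilson score interval (z=1.96 is roughly 95% confidence)."""
    if total == 0:
        return 0.0  # no evidence yet, so no credit
    p = successes / total
    z2 = z * z
    centre = p + z2 / (2 * total)
    margin = z * math.sqrt(p * (1 - p) / total + z2 / (4 * total * total))
    return (centre - margin) / (1 + z2 / total)


def reputation(stats: NodeStats, w_uptime: float = 0.4, w_success: float = 0.6) -> float:
    """Blend uptime and confidence-adjusted success rate into a score in 0..1."""
    success = wilson_lower_bound(stats.jobs_ok, stats.jobs_total)
    return w_uptime * stats.uptime + w_success * success
```

With these weights, a node at 99% uptime with 95 of 100 jobs successful scores about 0.93, while one with the same uptime and a single successful job scores about 0.52.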

Smarter Scheduling & Elastic Workloads

As the number of providers grows, the scheduler needs to place work where it will finish fastest and at the lowest cost. We expect to broaden the current logic so that it can break large, parallel-friendly jobs into shards, match each shard to hardware that fits (CPU, GPU, RAM, bandwidth), and even run redundant copies of a shard when a task demands high assurance. The long-term vision is a global pool that behaves like a single, elastic super-cluster, whether the underlying nodes live in data centres or on edge devices.
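A greedy version of shard placement with optional redundancy might look like the following Python sketch. It is a simplification under stated assumptions: nodes are described only by CPU and GPU counts plus a per-shard price, “fastest and cheapest” is approximated by preferring the least-loaded and then the cheapest fitting node, and `Node`, `split`, and `schedule` are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Node:
    name: str
    cpus: int
    gpus: int
    price: float       # hypothetical cost per shard
    assigned: int = 0  # shards placed on this node so far


def split(items: list, shard_size: int) -> list[list]:
    """Break a parallel-friendly job into contiguous shards of at most shard_size items."""
    return [items[i:i + shard_size] for i in range(0, len(items), shard_size)]


def schedule(shards: list[list], nodes: list[Node], need_cpus: int, need_gpus: int,
             replicas: int = 1) -> dict[int, list[str]]:
    """Place each shard on `replicas` distinct fitting nodes.

    Among nodes that fit, prefer the least-loaded, then the cheapest.
    """
    fitting = [n for n in nodes if n.cpus >= need_cpus and n.gpus >= need_gpus]
    if len(fitting) < replicas:
        raise RuntimeError("not enough suitable nodes for the requested redundancy")
    placement: dict[int, list[str]] = {}
    for shard_id in range(len(shards)):
        chosen = sorted(fitting, key=lambda n: (n.assigned, n.price))[:replicas]
        for n in chosen:
            n.assigned += 1
        placement[shard_id] = [n.name for n in chosen]
    return placement
```

Setting `replicas=2` runs every shard on two different providers so their outputs can be compared; a production scheduler would also weigh RAM, bandwidth, measured speed, and reputation.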

Zero-Touch Onboarding

Joining the network should feel like flipping a switch. Eventually, new providers will install the agent, answer a few prompts, and begin earning within minutes, with no manual port forwarding, DNS tweaks, or firewall acrobatics. On the requester side, service endpoints generated by a job will be routed automatically, so users can hit an HTTPS URL without thinking about ingress plumbing. Removing that friction is key to exponential network growth.

Secure Node-to-Node Networking

A key milestone on our roadmap is to let workloads open encrypted pipes directly between collaborating nodes. Once that foundation is in place, we’ll evolve it into a self-organising mesh that links every cluster and sub-cluster. The vision is that traffic between any two nodes, whether they sit in the same rack or on opposite continents, travels end-to-end encrypted and reroutes around failures automatically, while the mesh itself handles NAT traversal, dynamic membership, and identity management. Achieving that will keep the compute layer fully peer-to-peer and independent of any single cloud or region.

Confidential Compute & Hardware Trust

Certain jobs will require guarantees that even the host operator cannot inspect code or data. We intend to integrate confidential-container runtimes such as Kata Containers, backed by hardware Trusted Execution Environments (Intel SGX, AMD SEV, and their successors). In practice, requesters will be able to flag a task as “confidential,” and the scheduler will route it only to nodes that prove cryptographically, through attestation, that they can execute it inside a hardware-secured enclave. Over time, we aim to extend this model to support emerging isolation technologies and encrypted image formats.
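The routing rule for confidential tasks can be sketched as a filter over attestation evidence. This is a hypothetical Python illustration: it assumes the vendor signature chain of each attestation report has already been verified elsewhere (represented here only by a `signature_valid` flag), and the allow-listed measurement, the TEE labels, and the one-hour freshness window are made-up values.

```python
from __future__ import annotations

import time
from dataclasses import dataclass


@dataclass(frozen=True)
class Attestation:
    tee: str               # label such as "sev-snp" or "tdx" (illustrative)
    measurement: str       # hash of the launched guest image, as reported by the hardware
    issued_at: float       # UNIX timestamp when the report was produced
    signature_valid: bool  # outcome of a vendor signature check performed elsewhere


@dataclass(frozen=True)
class ProviderNode:
    name: str
    attestation: Attestation | None = None  # None: the node offers no TEE evidence


SUPPORTED_TEES = {"sev-snp", "tdx"}               # hypothetical policy
TRUSTED_MEASUREMENTS = {"sha384:guest-image-v1"}  # hypothetical allow-list
MAX_REPORT_AGE_S = 3600.0                         # reject reports older than one hour


def is_trusted(att: Attestation | None, now: float) -> bool:
    """True if the evidence is signed, fresh, from a supported TEE, and measures a known image."""
    return (
        att is not None
        and att.signature_valid
        and att.tee in SUPPORTED_TEES
        and att.measurement in TRUSTED_MEASUREMENTS
        and 0 <= now - att.issued_at <= MAX_REPORT_AGE_S
    )


def eligible_nodes(nodes: list[ProviderNode], confidential: bool,
                   now: float | None = None) -> list[str]:
    """Confidential tasks go only to attested nodes; ordinary tasks may use any node."""
    now = time.time() if now is None else now
    if not confidential:
        return [n.name for n in nodes]
    return [n.name for n in nodes if is_trusted(n.attestation, now)]
```

Checking freshness matters because an old report says nothing about what the node is running now.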

Developer Experience & Open APIs

LinqProtocol already exposes REST and RPC endpoints, but we want to streamline the path from idea to production. Planned improvements include richer SDKs, a self-service web console, and policy tooling that lets providers set usage caps or pricing rules in plain language. All interfaces will remain open and schema-driven so that integrators can build custom tooling or plug the network into existing CI/CD pipelines with minimal effort.

Looking Further Ahead

Beyond these near-term goals, we are researching verifiable-compute techniques (e.g., proof-of-execution models) to attest that results are correct; on-chain governance so the community can steer protocol upgrades; and support for specialised accelerators such as FPGAs and new generations of GPUs. In every case, the guiding principle remains the same: leverage open standards, collaborate in the open, and keep the barrier to entry low for both providers and developers.
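Full proof-of-execution schemes are still a research topic, but a much simpler relative of the idea, redundant execution with majority voting, is easy to sketch. In this hypothetical Python example, several replicas return their output, each output is reduced to a SHA-256 digest, and a result is accepted only when at least `quorum` replicas agree; disagreeing nodes are reported so that a reputation system could penalise them. Voting can be fooled if enough replicas collude or share the same bug, which is one reason stronger proof-based techniques are worth researching.

```python
from __future__ import annotations

import hashlib
from collections import Counter


def digest(output: bytes) -> str:
    """Fingerprint a replica's output so results can be compared cheaply."""
    return hashlib.sha256(output).hexdigest()


def majority_result(results: dict[str, bytes], quorum: int) -> tuple[str, list[str]]:
    """Return the digest at least `quorum` replicas agree on, plus the dissenting nodes."""
    if not results:
        raise ValueError("no results to compare")
    digests = {node: digest(out) for node, out in results.items()}
    winner, votes = Counter(digests.values()).most_common(1)[0]
    if votes < quorum:
        raise ValueError("no output reached the quorum")
    dissenters = sorted(node for node, d in digests.items() if d != winner)
    return winner, dissenters
```

Choosing `quorum` greater than half the number of replicas ensures that at most one output can ever be accepted.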

Building an open, trustless compute network is not something we can (or want to) do alone. We invite you – the engineers, open-source contributors, cloud-native builders, and dreamers – to join us in this journey. There are several ways to get involved and we encourage you to take part in whichever way resonates with you:

Join our Community

Start by joining the LinqAI community channels (Discord, Telegram, etc.). There you can discuss ideas, ask questions, and get the latest updates. Early community members help shape the direction of the project with their feedback. We’re eager to hear your thoughts on everything from feature ideas to real-world use cases you’d like to see supported. Open dialogue with fellow enthusiasts and our core team will ensure we build something that truly serves its users.

Contribute Code & Expertise

LinqProtocol is still in closed development but will soon become an open-source project, and we welcome contributions. Whether you’re a blockchain developer, a Kubernetes expert, a security researcher, a networking nerd, or a documentation guru – your expertise can leave a mark. Keep an eye out for our GitLab/GitHub repositories (we’ll be open-sourcing components as they mature) and see where you can jump in. Tackle a good first issue, improve a module, or even propose a new component. We will follow a typical open-source contribution process (fork, pull request, code review), and our team is committed to helping new contributors get onboarded. By contributing, you won’t just be writing code; you’ll help architect the future of decentralized computing. If coding isn’t your thing, you can still contribute by writing docs, creating tutorials, or helping with QA and testing. Every bit counts.

Run a Node or Use the Network

If you have access to servers, spare compute power, or even a fleet of edge devices, consider becoming a LinqProtocol provider as soon as we launch on a testnet. The earlier you join, the more you can help us learn and improve the system (and the sooner you can start earning rewards for contributing your compute). Our documentation will guide you through setting up the LinqProtocol client on your hardware. Setup should be straightforward: we are aiming for a one-line run command or something similarly simple. By running a node, you become part of the network’s backbone and directly help make the network more robust and decentralized. On the flip side, if you have compute-heavy tasks that could benefit from distributed execution, get ready to submit a job on LinqProtocol. Your feedback on that user experience will be invaluable. We want developers to view this network as a viable alternative to traditional cloud providers for certain workloads, and real usage and feedback will help get us there.

Spread the Word and Collaborate

Advocacy is a huge part of open source. If LinqProtocol’s vision excites you, spread the word to colleagues or friends in the industry. You might know teams grappling with scaling computations or researchers who need affordable GPU time – let them know there’s an open alternative emerging. Furthermore, we’re looking to collaborate with other open-source projects and communities. Perhaps you maintain a project that could integrate with LinqProtocol, or you see a way to combine forces (for example, a data science tool that could use LinqProtocol as its execution backend). Reach out! Open source thrives on the cross-pollination of ideas. By building bridges between projects, we can accelerate each other’s development. Our arms are open to partnerships that advance the state of decentralized and autonomous computing.

The call to action is simple: be a part of LinqProtocol in whatever way you can. This project isn’t a closed product being handed down; it’s a living network that grows stronger with each new participant. Whether you contribute code, run a node, or simply give us a shout-out, you are helping to shape the future of compute. The problems we’re tackling – breaking the reliance on centralized clouds, making computation trustless, utilizing idle resources globally – are ambitious. But with a passionate community, we believe no problem is too hard. Your involvement is the catalyst that will turn this vision into reality.

We stand at an inflection point in the evolution of cloud computing. Just as open-source software dominated the world over the past two decades, decentralization and community-driven infrastructure are poised to redefine how we think about compute. LinqProtocol’s vision of an open, trustless compute network is bold, but it’s also a natural continuation of the path that brought us here. After all, the internet itself was built on open protocols and collaboration across organizations. Now, we’re extending that spirit to the realm of computational power – making it borderless, permissionless, and owned by no one and everyone at the same time.

Our journey is just beginning, but momentum is on our side. Every week, we see growing interest from developers and partners who share our belief that the future of compute should be as open as the software running on it. By leveraging open-source technologies and engaging a global community, we are ensuring that LinqProtocol will remain adaptable, transparent, and innovative. There’s a sense of inevitability here: just as Linux and the cloud democratized computing, a decentralized network like ours can democratize who provides and accesses that computing power. The implications are vast – imagine a world where expensive cloud clusters give way to a vast grid of everyday devices working in concert, where no single corporation can dictate prices or policies, and where innovation isn’t bottlenecked by access to infrastructure. That’s the world we’re striving to enable.

None of this can happen in a vacuum. It will take the collective effort of a community of open-source enthusiasts, seasoned engineers, and brave early adopters to push this project forward. The good news is that if you’ve read this far, you’re likely one of them. Together, we can prove that an open model not only matches the old way of doing things, but surpasses it – in resilience, in cost-efficiency, in trustworthiness. LinqProtocol is more than a project; it’s a movement towards a future where compute is a common good, just like the open-source software we all rely on.

In closing, we want to reinforce our commitment: LinqProtocol will always prioritize openness, community, and trustless design. Those principles are our North Star. We’re incredibly excited for what’s to come, and we’re honored to be on this journey with all of you. Let’s build this future together. The era of open compute is dawning, and with your help, LinqProtocol will be a driving force in making it a reality. Here’s to an open cloud and the brilliant, decentralized future ahead.

Sources & Further Reading